Back in 2016, Ajoy Mani explained the visual effects work on Mechanic: Resurrection. He went on to work on several projects including The Yellow Birds, The Upside, Happy! and Emergence.

How did you get involved on this movie?

Shea Kammer, Executive Producer, sent me the script in December 2019 for a breakdown and budget estimate. I had collaborated with Shea previously on a feature film. Once Swan Song got the go-ahead for production, I got the call to fly to Vancouver to join the project in February 2020.

How was the collaboration with Director Benjamin Cleary?

Ben wrote the script and had been visualizing the movie for many years before we went to camera. As a result, he had thought about every aspect of the film and had detailed documents for what he wanted for all the tech in the movie. As he was a first-time feature director, it was understandably on me as the VFX department head to earn his trust and bring his vision alive.

We started prep in Vancouver in February 2020. Four weeks in, the pandemic shutdown started, and we all had to fly back home. We did not know where we stood at that point. Ben went to Los Angeles, and I was in New York. Regardless of when we would pick up the project again, the lull was a good time to get into world building and the nitty-gritty of the design features for AR. We got approval to engage Territory to design the layout and look and feel of the AR systems in the film. The process was done remotely, but it helped build confidence and rapport with Ben.

That is his first VFX feature. How did you help him with that?

It is Ben’s first feature, but he was not entirely alien to VFX. Ben is actively involved in the VR space, so he does understand the basics of VFX. The key was to gain Ben’s trust so that he would entrust me with bringing his vision alive. Be his agent in the VFX universe. The six-month break we had due to the pandemic lockdown went a long way to developing the relationship and trust required. We would communicate daily during this time.

What was his approach and expectations about the visual effects?

We used the pandemic break wisely. Especially with everyone working remotely, storyboards became a key to keep Ben’s vision clear. I had requested storyboards for every single VFX scene. Ben worked with a storyboard artist and got this done. This was hugely helpful to design the sequences.

By the time we got back to Vancouver in September 2020, we were quite well prepared. Masanobu Takayanagi, our DP, joined us shortly thereafter. Ben, Masa and I would spend time going over key VFX sequences and design our approach carefully to fit our schedule. This helped strengthen Ben’s confidence in our approach. Ben was very measured in his view of the future world in 2040. Rooted in real world progressions. The tech was always complementary and never took the front stage. No gimmicks. Even the twinning sequences we designed in a realistic way. Never overplaying it.

How did you organize the work with your VFX Producer?

I was the overall VFX supervisor and producer for the show from prep in February 2020. As we were working during the pandemic in Vancouver, New York, Dublin, and Toronto, it was hard to find a producer willing to join us at the height of the pandemic. I had run numerous shows as the Supervisor and Producer before, and I stepped into the dual role for this film as well.

During post, the director was offered an additional four weeks for editing and our delivery deadline was truncated. This cut into the time required for VFX. As a result of the crunch, I requested to bring on a VFX producer to help update the producers and studio. We hired Bryce Nielsen, a Berlin-based VFX Producer, for the second half of post, primarily as point person to keep the producers and studio abreast of VFX progress. This freed me up to focus on supervisory duties with the director and vendors during the tight delivery deadline we had.

I use a proprietary VFX management system that manages all aspects of the client side VFX process. All team members were able to remote login and get a dynamic global view, with a summary of daily tasks.

How did you choose the various vendors?

The story is set in the Pacific Northwest, which has a unique microclimate. The weather, flora and geography are very particular to the area. As a result, it was important to have our key vendor based in Vancouver, so the artists are familiar with the look. The obvious choice was Image Engine which is known for their artistic flair and execution. They managed the Lab One sequence, concession bot in the train, and the full CG Steed autonomous vehicles.

As we were editing the feature in Dublin, we also leaned on Screen Scene VFX which is based there for additional VFX. Ben is from Dublin, and he had worked with them before. We were editing at Screen Scene, so it was useful to have a vendor close. Screen Scene has experience with face replacements and aging, so they were a good fit for all the twinning sequences, train sequence and Arra House set extensions.

For all the AR, we brought on Territory out of London. We engaged them early as we collaborated with them to design the AR in the movie during the pandemic lockdown before production. Territory specializes in cinematic motion graphics and was a good fit.

Ben is quite keen on solid design and graphics. As this was an area of immense importance to Ben, I reached out to one of the top traditional design firms in the world. We hired Pentagram out of New York to design the fonts and style guide for the content on the AR screens. Natasha Jen led the Pentagram team.

When our delivery deadline was truncated from twelve weeks to six weeks from turn over, I brought on two additional vendors to help speed up the delivery. Basilic Fly from Chennai took on the challenge to handle most of the roto, paint, and track for our other vendors, as well as some light compositing. Additional work was performed by Company 3 VFX out of Toronto.

Can you tell us how you split the work amongst the vendors?

Once it was clear our delivery deadline was being truncated so that the film could be delivered in time for the awards season, we needed to spread the work amongst our vendors diligently.

The heavy lift for the CG autonomous vehicle and Lab One which features a classic Pacific Northwest look was addressed by Image Engine.

The twinning, train exterior views and Arra House set extensions for exterior shots were assigned to Screen Scene. They have a lot of experience with face replacement and de-aging which made them a good fit for twinning work.

Pentagram designed the fonts and style guide for the AR screens. Territory incorporated these into the AR screens and designed the layout and motion of these screens. Territory was also in charge of the graphics on the memory device.

Additional set replacement and extensions were done by Company 3 VFX, and light compositing, roto, paint, and track work were provided by Basilic Fly.

How was the collaboration with the VFX Supervisors?

When I first spoke to Shawn Walsh, the GM at Image Engine, he kindly offered the services of Thomas Schelesny, VFX Supervisor at Image Engine, to lend a hand on set. Thomas was with us for all set days where we had the Steed vehicles and Lab One. This was immensely helpful as what was shot was perfectly optimized for what Image Engine needed to turn around the work without jumping through hoops. This also built a good rapport between Thomas and me.

While in prep, once Masa, Ben and I had worked out the twinning scenes, we shot tests for all the many ways we planned to execute the twinning. We sent these to Screen Scene in Dublin. Their VFX Supervisor, Ed Bruce, then got us the results, which went a long way to informing us on how best to execute the twinning. The tests were helpful to designing the twinning sequences without overreaching or overplaying our hand.

For the AR screens, we collaborated closely with Natasha Jen at Pentagram for the style guide and Sam Keehan, the supervisor at Territory. We had Zoom calls twice a week with both Pentagram and Territory during the thick of post to develop the AR and graphics for the movie.

Company 3 VFX came on board after turnover to take on additional work to get us to our deadline. Rickey Verma, VFX Supervisor at CO3 VFX and I chatted on what was required, and he was able to get up to speed quite fast.

Basilic Fly has been a part of my vendor mix on numerous projects for VFX support work and was able to jump right in on Swan Song as well.

Where were various parts of the movie filmed, especially the Lab in the forest?

The Lab One interiors were designed as set pieces by our production designer, Annie Beauchamp, based on feedback from Ben. The exterior views of the building were done fully in CG by Screen Scene in post after edit. Annie and her team studied Ben’s brief and sent us concept sketches for how she envisioned the Arra House nestled in the forests of the island. I would then mark up annotated frames for each shot, which Screen Scene would execute.

Arra Labs’ footprint is underground. The parts that are visible above ground are part of the Arra House. As the lab is doing illegal cloning work, they need to have the smallest footprint on the surface so as not to grab attention. Ben had a notably clear brief for us on how he envisioned this. Parts of the house would pop up on the surface in and around the coast of the island. All the deep cloning work is done underground.

Lab One is underground but has a large screen that shows a view from the island exterior matching time of day. This view was created by Image Engine as a 2.5D digital matte painting, based on references provided by Ben.

Can you tell us more about the LED screen? How did you create the stunning exterior view of the Lab?

When we were designing Lab One in prep, the DP, Masa, and I pushed for an LED wall for the Lab One view rather than a blue screen for later replacement. The reasons for this were manifold. The large “window” view was going to be the primary lighting for the room. The room was also surrounded by large glass panes and a reflective floor. If we put a blue screen out there, the interior would be flat and lifeless from a lighting and reflection point of view. Not to mention a lot of screen spill. By having a 1080p, 28-foot by 9-foot LED wall, we addressed these issues.

Ben also wanted the view to have parallax and be super high resolution to the point where it would be indistinguishable from a real view. We took a deep dive into the tech based on what we have now and what could be possible in 20 years. The screen in the movie would be a lenticular screen. Based on each person’s position, the viewer would have a unique parallax. We commissioned Image Engine to develop the view for the four times of day that we are in the lab.
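The idea of a unique parallax per viewer can be illustrated with basic window geometry. The sketch below is not the production's method; it assumes the screen behaves like a flat window, that the layers of a 2.5D painting sit at hypothetical depths behind it, and that a viewer steps sideways. The function names and every distance are invented for illustration.

```python
def screen_shift(viewer_move_m, viewer_to_screen_m, depth_behind_screen_m):
    """Distance (metres) a layer's image must slide across the screen when the
    viewer steps sideways, so the layer appears fixed in space behind the
    'window'. Follows from similar triangles: shift = move * z / (d + z)."""
    d = viewer_to_screen_m
    z = depth_behind_screen_m
    return viewer_move_m * z / (d + z)


# Hypothetical layer depths behind the screen, in metres (assumed values).
LAYERS = {"near trees": 20.0, "shoreline": 200.0, "far islands": 5000.0}


def shifts_for_viewer(viewer_move_m, viewer_to_screen_m):
    """Per-layer on-screen shift for one viewer position."""
    return {
        name: screen_shift(viewer_move_m, viewer_to_screen_m, depth)
        for name, depth in LAYERS.items()
    }


# A viewer standing 4 m from the screen steps 1 m to the side.
for name, shift in shifts_for_viewer(1.0, 4.0).items():
    print(f"{name}: {shift:.3f} m")
```

Under these assumptions, distant layers slide almost as far as the viewer moves, while layers close to the screen plane barely move. Each viewer position needs its own set of shifts, which is why a single ordinary image cannot give correct parallax to several people at once.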

Image Engine built it as a 2.5D view from the director’s brief and references we provided them from the British Columbia coast. We piped the view for each scene based on time of day when we shot it. As the playback was 1080p, in post we replaced the view in each shot with a full 4K version.
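A rough back-of-the-envelope calculation shows why a 1080p image on a wall this wide would be replaced in post. The wall width comes from the interview; everything else below is an assumption made for illustration: that "1080p" means a 1920-pixel-wide image stretched across the full 28 feet, and that "4K" means a 3840-pixel-wide image. The actual signal mapping and panel pixel pitch are not stated in the source.

```python
WALL_WIDTH_FT = 28            # from the interview
PLAYBACK_WIDTH_PX = 1920      # assumption: "1080p" taken as 1920 x 1080
REPLACEMENT_WIDTH_PX = 3840   # assumption: "4K" taken as UHD width


def pixels_per_inch(width_px, width_ft):
    """Horizontal image pixels per inch of wall, assuming the image spans the full width."""
    return width_px / (width_ft * 12)


playback_ppi = pixels_per_inch(PLAYBACK_WIDTH_PX, WALL_WIDTH_FT)
replacement_ppi = pixels_per_inch(REPLACEMENT_WIDTH_PX, WALL_WIDTH_FT)

print(f"playback:    {playback_ppi:.2f} px per inch of wall")
print(f"replacement: {replacement_ppi:.2f} px per inch of wall")
```

Under these assumptions the playback image provides fewer than six pixels per inch of wall, which serves as a light source and reflection source on set but leaves little detail for a view meant to pass as real.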

What was your approach about the twinning creation?

I have had experience with twin work over two decades on various shows. This has taught me to approach twinning shot for shot rather than with a one-size-fits-all approach. Ben, Masa and I storyboarded all the twinning sequences during prep. Then we broke down the approach for each shot.

Can you elaborate about the twinning shots creation?

As we had a tight shooting deadline, we decided not to use a motion control rig which would take up valuable time to set up. Cameron is the name of the protagonist; his clone is named Jack. For all methods employed, we always started with the lead character for the scene. If Cam was leading the shot, then we would shoot with Mahershala as Cam first. Shane would play Jack. The twinning shots were created using the following methods:

Split screen: For simple lock-off shots, we had Mahershala and his twinning double do the scene as both Cam and Jack. Always starting with the character who leads the scene. This sets the tone for the scene. Simple split screen merge in post.

Merge plates from repeating head: This was used only for two shots in the movie when Cam and Jack come down the stairs. As this has a tilt and pan at the same time, the merge becomes far more difficult. As a result, a repeating head was a satisfactory solution for success.

Merge on pan/tilt: It’s incredible how well you can merge two plates on a tilt or pan if it is shot by a competent camera operator. A-cam was operated by our DP, Masanobu Takayanagi and B-cam by Peter Wilke. Both were exceptional at repeating the trajectory of shots with robotic precision.

Merge on dolly/truck/pedestal: Same as merge on Pan/Tilt, but this time the success of the shot depends additionally on the accuracy of the grip moving the camera for consistent speed.

Face replacement: This is by far the trickiest. I made sure to do a full body scan of both Mahershala and his twinning double, Shane Dean, while we were in production, in the various clothing used throughout the movie. This was then used to track the double and replace with Mahershala’s face scan. For all shots where I expected face replacement, I also made sure to acquire HDRIs to light the replacement head. Composite side morph was also employed to match face profiles on a few shots.
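The simplest method in the list above, the split screen on a locked-off camera, can be sketched in a few lines. This is a toy illustration, not how the vendors did it: real merges are done in a compositing package with hand-shaped mattes, while this version assumes two same-sized greyscale plates stored as lists of rows and a straight vertical seam with a linear cross-fade. The function name and parameters are hypothetical.

```python
def split_screen_merge(plate_a, plate_b, seam_x, feather_px):
    """Blend two same-sized greyscale plates along a vertical seam.

    Pixels left of the seam come from plate_a and pixels right of it from
    plate_b, with a linear cross-fade feather_px wide centred on seam_x.
    """
    height = len(plate_a)
    width = len(plate_a[0])
    if len(plate_b) != height or any(len(row) != width for row in plate_a + plate_b):
        raise ValueError("plates must have identical dimensions")

    merged = []
    for row_a, row_b in zip(plate_a, plate_b):
        out_row = []
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if feather_px <= 0:
                # Hard cut: no cross-fade at all.
                weight_b = 0.0 if x < seam_x else 1.0
            else:
                # Linear ramp from 0 to 1 across the feather, clamped outside it.
                weight_b = (x - seam_x) / feather_px + 0.5
                weight_b = min(1.0, max(0.0, weight_b))
            out_row.append(a * (1.0 - weight_b) + b * weight_b)
        merged.append(out_row)
    return merged


# Plate A is all white (actor as the first character), plate B all black
# (actor as the second character); the seam sits at column 4.
plate_a = [[1.0] * 8 for _ in range(2)]
plate_b = [[0.0] * 8 for _ in range(2)]
merged = split_screen_merge(plate_a, plate_b, seam_x=4, feather_px=2)
print(merged[0])
```

The seam only works if nothing crosses it and the camera has not moved between the two passes, which is why this method suits lock-off shots and the moving-camera cases need the other approaches.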

During prep we shot tests for all the above twinning methods and commissioned Screen Scene, who had been allocated the twinning shots, to do the merges. This informed us on how best to optimize our methodology and gave confidence to Ben that these could be achieved without a motion control rig. As a result of this thorough planning, every single shot for twinning was perfectly shot and snapped together in edit even before VFX got their hands on it.

What was the main challenge with the twins?

The biggest challenge in twinning was to get a good twinning double. I made this exceedingly clear early in the process and Ben made sure that all twinning candidates were first approved by VFX. This process was particularly arduous as getting talent during the pandemic, when travel was limited, was an issue. We screened countless candidates. Besides the candidate meeting my technical requirements for VFX, he also had to meet Mahershala’s approval as he would have to interact with him comfortably. We went through a plethora of candidates till we found Shane Dean. Shane matched Mahershala physically for build, height, and skin tone. Once Shane was technically vetted, Ben and Mahershala vetted him for the role.

Mahershala has noticeably identifiable earlobes. I wanted to avoid having to do twinning work for over the shoulder shots. I requested a prosthetic earpiece for Shane. As luck would have it, Mahershala had prosthetics made for another show. We reached out to the prosthetic team who did that to make a prosthetic piece they could attach to Shane’s ears. This allowed us to get away with a lot of the over the shoulder work in camera. For a few of the over the shoulder shots, we did have to adjust the back of his head and make tweaks to the neck and face profile where we could still sense Shane.

Twinning work takes a lot from an actor. It can be emotionally draining to switch characters. Even though Jack is a perfect physical clone of Cam, there are nuanced differences in their performances. Cam is more dominant. Jack knows he is a clone; he is therefore a bit more submissive as he is adapting to his role.

We would always shoot the leading character for a scene first. Shoot the whole scene out for all set ups with actors in one role. We would mark the position for each camera, for each setup, as we would have to precisely replicate it once the actors switch roles. The actors would then go and do a costume change to switch characters and emotionally prepare. Then we would shoot out the whole scene top down for the B-plate.

The key was always to make sure the eyeline for both plates matched perfectly. We made sure to mark the floor where actors stood, so when we switched positions, they lined up perfectly. Rather than dot the whole floor with tape, I used UV markers. This worked well for us to have a lot of notations and marks on the floor and walls that were invisible to the camera.

The toughest twinning sequence was the one in the Lab where they get into a physical altercation. As they were right on top of each other, this was mostly managed using face replacement. For each of these shots, I did switch actors and get the B-plate, so we had the option to composite rather than 3D face replace. Where compositing did not work, it offered us a good reference for the 3D face replacement.

How did you create the Autonomous driverless car?

Building an autonomous driverless car (Steed) that could be remotely controlled was astronomical and beyond the reach of our production budget. As a result, we settled on a hybrid compromise.

For all shots where the Steed is moving, I picked a Ford F-150 XL as our base proxy vehicle. I made this pick based on preliminary designs from the art department. The Ford F-150 had the closest wheelbase to it. Art Department then amended their design to match the wheelbase of the Ford F-150 exactly so that when we replaced the proxy with the CG Steed, the turning radius and fit would be perfect.

Annie Beauchamp our production designer and Michael Diner, art director put together a team to design the Steed autonomous vehicle based on the detailed director’s brief from Ben during prep. Once we had a director approved design, art department commissioned an interior buck of the Steed to be built.

Our Special Effects Supervisor, Tony Lazarowich then built a wheeled unit for the buck to sit on that could be pulled or pushed into place. This was useful to roll the buck into place and for some shots where the car is coming to a stop with Cam in it.

The third component to the Steed was its lighting. The headlights and rear lights of the vehicle were then translated by our DP and Gaffer, David Warner into a lighting rig. The lighting rig was then attached to the buck and the Ford F-150 proxy so that the interactive lighting on the environment matched.

Next, we LiDAR scanned the interior buck and the Ford F-150 proxy. This allowed the team at Image Engine to track the buck and proxy with the scans and then snap on the CG Steed.

The final component for a perfect fit was reflection plates. For all shots where the car is stationary, we made sure to capture HDRI 360s for the reflection and lighting. For shots where the car is moving, I either had a vehicle mounted 360 rig to capture moving reflections or drone mounted capture of the environment that was then later wrapped onto the vehicle for reflections. I directed all the second unit and drone shoots for the movie as most of these had VFX elements in them. As a result, I made sure to get all supporting VFX plates on location.

The logo for the Steed was designed by Pentagram.

We tweaked the exterior design of the Steed during post. Especially its headlights and rear lights with the concept team at Image Engine. Image Engine brought it all together with the final vehicle you see on screen.

The movie is full of graphics and motion design. Can you elaborate about their design and creation?

We engaged Territory Studio out of London early during prep to design all the AR screens and Memory Device graphics for the movie. Lead designer Sam Keehan led them. Ben had a detailed document on how he perceived the AR screens for the movie. It was exceptionally well researched, and he was clear that he did not want flashy screens but rooted in traditional design.

We also engaged technology and future consultants to help with world building. The AR is overlaid via Cam’s smart contact lenses. The control of the AR system is based on current research like project Soli. We spent six months working out the details of the AR systems and how to interact with it.

Once Territory developed tests that were approved by Ben, I had foam core proxies made for every single screen in three varied sizes. One at the scale we designed, one at 80% and one at 60%. When we shot AR scenes, I would place an AR screen proxy in front of the actor so that his eyeline matched and he had context to interact with. The proxy screen also helped frame the shot. Depending on the type of shot, the screen may have an iPad with content, printed content or green screen to key FG actor. We finished by shooting a clean plate to paint out the proxy and replace with CG AR.

For the content on the screens, we hired the New York team of Pentagram, a traditional graphic design studio, led by Natasha Jen. They designed the content layout, fonts, and style guides for the various screens.

Ben has a particular interest in polished graphic design and was involved intimately at every stage of the design process for the AR screens.

What kind of references and influences did you receive for the graphics?

Ben had thought of all aspects of the movie right down to the design elements. He had detailed briefs for everything from the bots and vehicles to the AR screens. He provided me with PDFs with references, markups and briefs. From Ben’s brief, I would work with the design teams at Pentagram and Territory to come up with concepts and designs for approval.

Did you want to reveal to us any other invisible effects?

The film is full of invisible effects. Ben wanted a future world that was a realistic iteration of our current world. All VFX in the movie was planned to be invisible.

As originally scripted, the movie had a lot more bots and graphics. As the movie was cut down to its final run time, a lot of these came out of the edit. To bring the film down to its current run time, Ben had to move scenes around. This caused some continuity issues in clothing. We have quite a few scenes where clothing was changed in CG to match the continuity. Shirts and woolen hats were retinted or changed to match.

As Arra Labs is in a very isolated part of the world, a lot of paint outs of civilization also happened.

Due to limitations in travel in the middle of the pandemic, some of the exterior scenes were also shot on stage. The sequence where Cam and Jack are out on a rock overlooking the islands was one such example. The DP, production designer and I trekked through the woods to this area near Vancouver that had this incredible view. We had heard about it from locals. It is not a place that’s easily accessed by production vehicles. We hiked there. I then LiDAR scanned the huge rock on this location, shot detailed 360 bracketed panoramas as well as 360 plates with an ARRI to account for the moving water and trees. We sat there while the sun set till Masa had the exact lighting he wanted. A four-minute window. We marked the time and azimuth of the sun at this moment. Art department then built a set piece from my LiDAR scans of the location. The gaffer matched the lighting density and direction to the time Masa picked on location. Co3 VFX in Toronto comped the Lookout Point sequences.

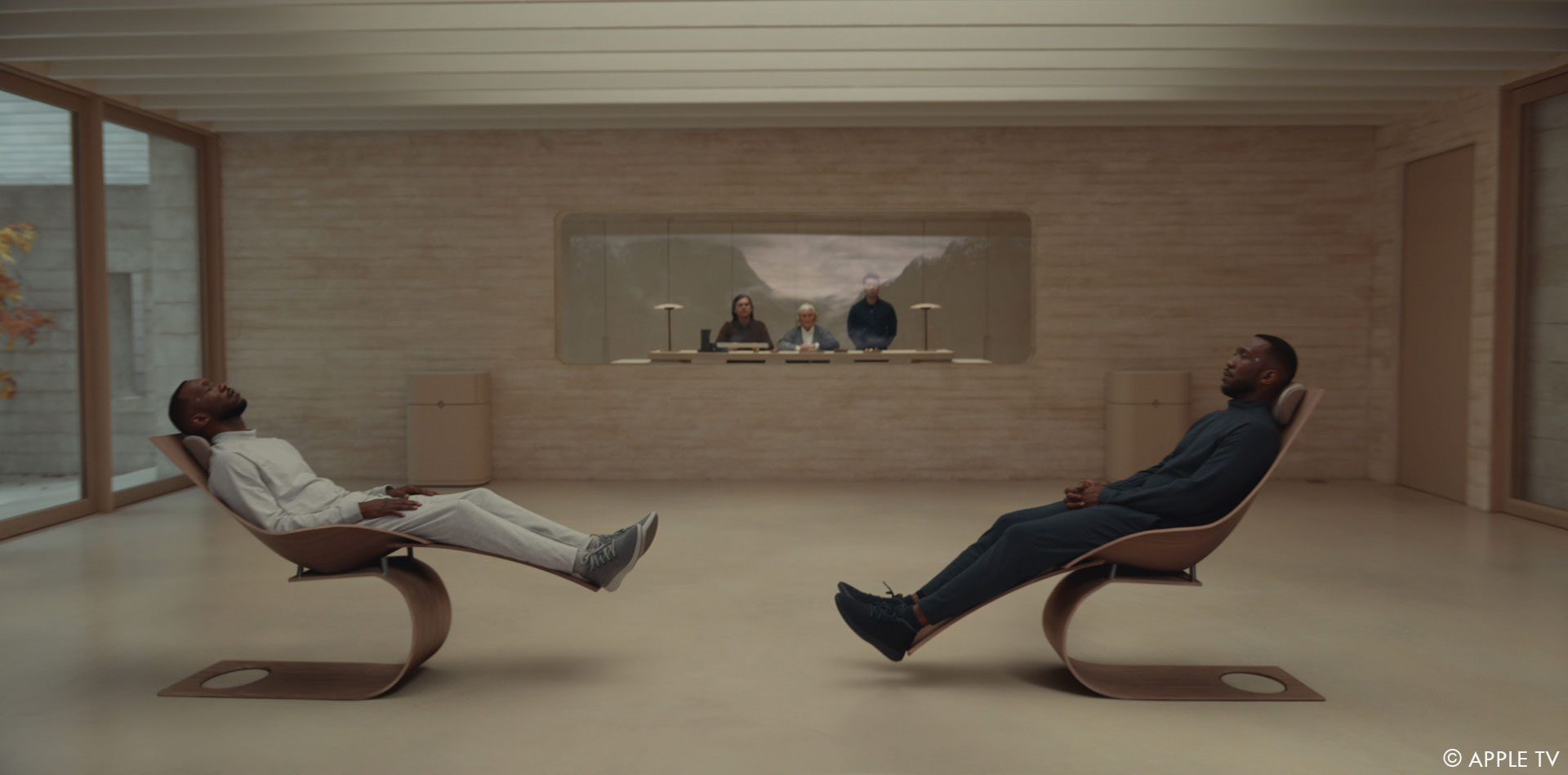

The chair in Lab One is another invisible effect. The chair is based on Tadao Ando’s Dream Chair. But Ben wanted it to swivel and have a footrest that magically slides in and out. We lack the technology now for the things this chair had to do, so we managed it as VFX. We had an SFX version of the chair for all shots where it swivels with an actor on it. The base of the rig was quite massive. This was painted out and replaced with a slim CG base. All shots where the footrest slides in and cradles the legs when it swivels were also full CG. The chair work was done by Image Engine.

Whenever we are out in the city, there are subtle city extensions done to give it a 2040 upgrade. These extensions were executed by Image Engine. It’s only 20 years into the future, so we never overplayed our hand.

Which sequence or shot was the most challenging?

As we had thoroughly planned out every single shot for the movie, it flowed seamlessly during production. The challenges were entirely during post. Originally, we had 12 weeks to execute all the VFX for the film after turning over. However, director’s cut and picture lock were extended, and our deadline was truncated so the movie could make the awards run. This resulted in our delivery schedule being truncated to 6 weeks, which put a massive strain on vendors and editorial. This also made it hard for the director and me to review and approve shots in the way we had originally planned.

There were a lot of shows that were in post at the same time we were and there was extraordinarily little capacity at studios. To meet this deadline with the least compromise was by far the biggest challenge.

Is there something specific that gives you some really short nights?

The super truncated delivery schedule we had and the stresses that come with it still have me reeling in cold sweat.

What is your favorite shot or sequence?

The fight between Cam and Jack in Lab One is right up there. Initially we felt this was one of the most complex sequences, so we spent time studying it thoroughly. The Director, DP and I spent a lot of time designing the sequence and it was shot meticulously. It was really rewarding to see how easily it snapped together in edit.

What is your best memory on this show?

The best memories from this show were from production. It was an exceedingly difficult time in autumn 2020 when we started production with the pandemic raging. We were in Vancouver with a dream team. The pandemic forced us all closer and it was really one of the best synergies on set I have ever experienced. I looked forward to being on set with every single crew member during this time. Our Covid team was spectacular and made us all feel safe and protected.

The experience went a long way to building a close relationship with the director, producers, and other crew.

How long have you worked on this show?

I started the show in February 2020, but we were forced to go back home mid-March when the pandemic hit. It was not until September 2020 that we got back to Vancouver to pick back up. During the six-month period when we were in stasis, we did work on world building and storyboarding, which was immensely helpful. We delivered the movie in October 2021. Twenty months total if you count the period we stood down.

What is the VFX shots count?

551 shots in the final cut.

What is your next project?

I am considering a few projects currently. Hope to have a decision by the end of January 2022.

A big thanks for your time.

WANT TO KNOW MORE?

Screen Scene VFX: Dedicated page about Swan Song on Screen Scene VFX website.

Apple TV+: You can watch Swan Song on Apple TV+ now.

© Vincent Frei – The Art of VFX – 2022
