In 2019, Eric Saindon detailed the work done by Weta FX on Alita: Battle Angel. Today he returns to tell us about the many challenges of Avatar: The Way of Water!

How did it feel to be back on Pandora?

I read an early version of the script a year prior to heading back to Pandora and was very excited to return to Avatar. The new creatures and characters made it exciting and the challenge of the water and scale of the film scared the crap out of me.

How was this new collaboration with legendary Director James Cameron?

For the first Avatar, I worked mostly at Weta and interacted with Jim only at reviews. This time, my role was initially On-set VFX Supervisor for the live action filming, then I became the face-to-face contact person for Jim on a daily basis. I had an office just a couple of doors down from Jim in post-production and would typically play clips on my monitor to lure him into reviews whenever he walked past. He would stop in almost every time and joked that I always had “director bait” on my monitor, but he could not help himself and always had to stop.

What were the main changes he wanted to make since the first film?

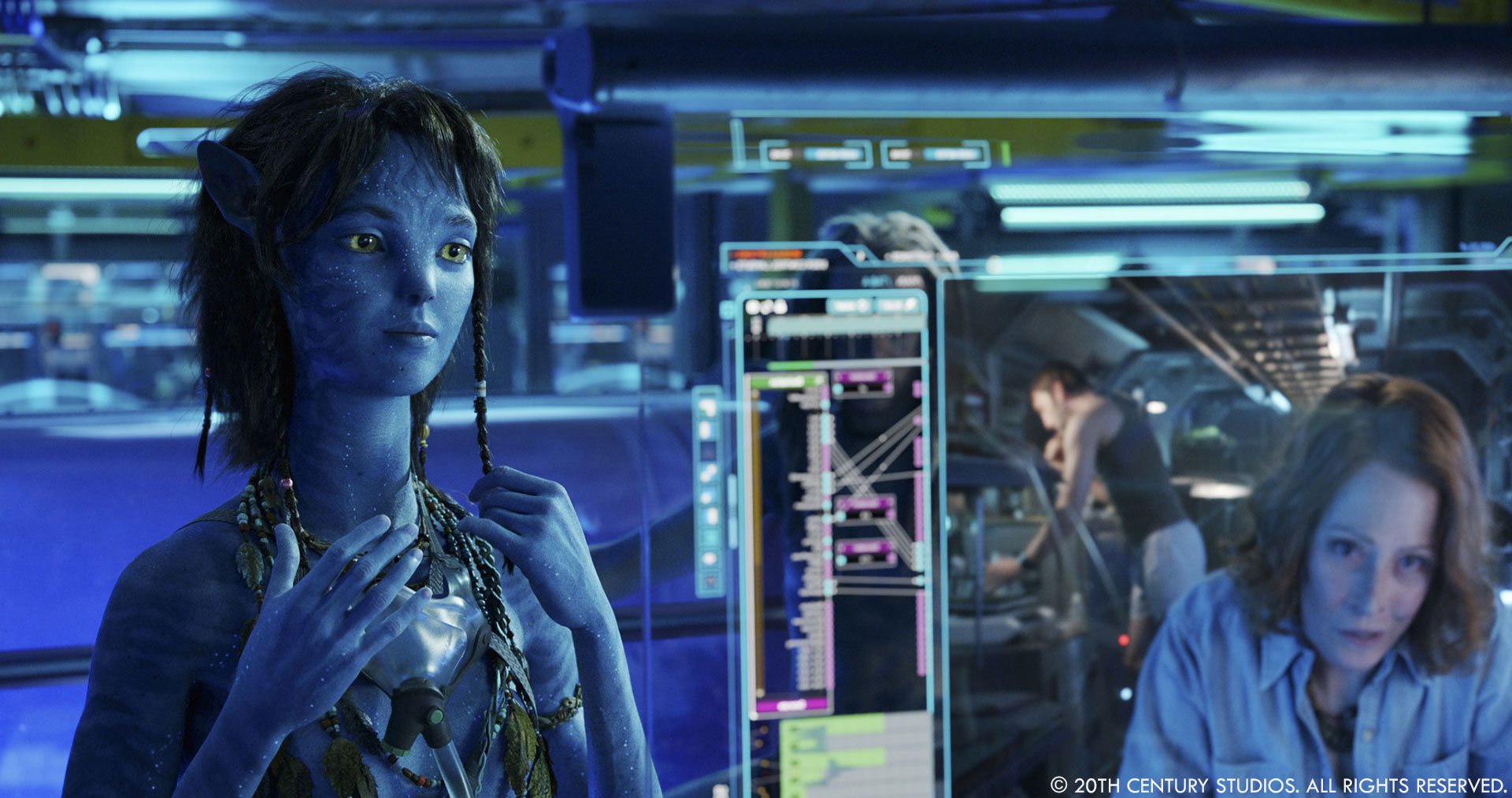

The biggest concern for Jim and Weta was getting the performances from the actors to read through the characters. We spent a lot of extra resources upgrading the facial and capture systems so we could really translate the emotion of the actors, so that the audience feels the right emotion at the right times as they watch the film. There is nothing worse than getting distracted and losing the magic of a scene due to a bad CG character.

Can you elaborate about the Virtual Production process?

The movie was entirely shot as a virtual production which included full performances, lighting supervised by the DP, and basic FX. For each scene in the virtual production, an entire area was created so that when the actors performed, Jim could put the camera anywhere he wanted and see the world come to life. A virtual production environment is a little like a high-end video game in which you can wander around and explore the world. A representation of this is then built on the performance capture stage, so the actors have a proper set to perform on and react to. The actors’ motion is then placed onto the CG characters for cameras and FX to be added.

How did you organize the work with your VFX Producer?

Having daily catch-ups with all the supervisors and our Senior VFX Producer Lena Scanlan helped everyone stay up to date with new techniques and helpful processes. We didn’t want any of the teams reinventing the wheel for no reason.

How did you split the work amongst the other VFX Supervisors at Weta FX?

We split up Avatar among a fair number of VFX Supervisors to try and keep similar types of work with different groups and work as efficiently as possible. One of the biggest advantages of doing it this way was we could focus the FX department on certain types of work on each team. For example, Wayne Stables supervised most of the jungle work and his FX artists were able to concentrate on plant simulation and not worry about big water simulations.

Can you elaborate about the design and creation of the various Avatars?

For all of the Na’vi and Recoms, the designers incorporated elements of the actors into the characters’ designs. Incorporating elements like mouths, chins, or other features helped to translate the performance to the character and bring them to life. The underlying structure of their CG bodies is also similar, with muscle systems and bones driving the rig.

Were you able to reuse some of the assets or did you need to start almost from scratch?

We were able to use some of the base models for plants and trees but they were all built to a higher resolution. The characters were all built from scratch using the original models as a guide. The level of detail in the designs and simulations of the characters was greatly increased in the new film. For example, in the first Avatar we simulated the hair on the characters with strips, and clumps of hair were all simmed as one so they did not react with one another. In the new movie, technology updates allowed us to solve individual hairs, not only on the heads of the characters, but for their peach fuzz as it interacts with the wind or water. We were also able to understand compression and stretch for things like skin and changes to the blood flow under the surface, which in turn affect the colour of the subsurface or skin colour.

Can you elaborate about the face performance work?

The new film is the first to use our new facial system that has been in development for a few years. Up to this point, and all the way back to Gollum, we used a FACS system for our facial work. In the old model, we would create lots of sculpts of poses that characters could achieve, then the system would interpolate in between the poses to generate the facial performance. The new system, on the other hand, is fully muscle-driven and uses an AI network to help determine the proper tension for muscles and fat under the surface, driving the skin. Using this system, we achieved high-res facial performances for all of the characters in the film, instead of just a few heroes. In the new movie, you should be able to watch any background character and see the full range of emotion and performance.
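To make the older pose-based approach described above concrete, here is a minimal sketch of generic pose blending: a face is computed as the neutral mesh plus weighted offsets toward each sculpted pose. This is only an illustration of the general technique, not Weta's system; the function name `blend_poses`, the pose name, and the tiny two-vertex mesh are all hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def blend_poses(
    neutral: List[Vec3],
    sculpts: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Linear pose blend: neutral + sum(weight * (sculpt - neutral)) per vertex."""
    result = []
    for i, (bx, by, bz) in enumerate(neutral):
        x, y, z = bx, by, bz
        for name, w in weights.items():
            sx, sy, sz = sculpts[name][i]
            # Add this pose's offset from neutral, scaled by its weight.
            x += w * (sx - bx)
            y += w * (sy - by)
            z += w * (sz - bz)
        result.append((x, y, z))
    return result


# Hypothetical two-vertex "face" with a single sculpted pose.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
sculpts = {"smile": [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)]}
half_smile = blend_poses(neutral, sculpts, {"smile": 0.5})
```

A blend like this can only reach shapes that lie between the sculpts, which is one way to read the contrast with the muscle-driven system Eric describes.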

This new movie is taking us underwater. Can you elaborate about the design and creation of this environment?

One of the great things about working with Jim and the whole team at LEI is the design process and the virtual production. All environments in the film were built based on designs from the art directors. Once a layout was generated, a first lighting pass was done by our team embedded at LEI, led by Dan Cox, VFX Supervisor for Weta. Dan and his team would work with Richie Baneham to show all of the environments to Jim for approval before anything was turned over to Weta. Once it arrived at Weta, we would add all of the detail and FX to bring the world to life. The underwater sequences were a new challenge for Weta. The layouts of all the plants and animals underwater used AI-driven procedural generation when possible to help get realistic growth patterns. The other thing that helped to properly understand the underwater world was proper attenuation and scattering in the volume – attenuation creates changes in hue, scattering causes so-called ‘veiling light’. If either of these is set wrong, the characters or environment lose scale or the volume looks empty, so they look like they are floating in space. Adding a little bit of marine snow was also very handy to give the sense of volume, especially when watching the film in stereo.
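The interplay of attenuation and veiling light can be sketched with a simplified, widely used underwater image-formation model: direct light decays exponentially with distance, and scattered water colour fills in what is lost. This is a rough illustration, not Weta's renderer; the function name and the per-channel coefficients are assumptions chosen only to show red fading faster than blue, and a single coefficient is used for both effects to keep the sketch short.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def underwater_color(
    object_color: RGB,
    water_color: RGB,
    attenuation_per_m: RGB,
    distance_m: float,
) -> RGB:
    """Per channel: object * t + water * (1 - t), with t = exp(-c * distance)."""
    out = []
    for obj, water, c in zip(object_color, water_color, attenuation_per_m):
        transmission = math.exp(-c * distance_m)  # fraction of direct light left
        out.append(obj * transmission + water * (1.0 - transmission))
    return (out[0], out[1], out[2])


# Illustrative values only: red is absorbed much faster than green or blue.
coeffs = (0.4, 0.07, 0.03)
skin = (1.0, 0.5, 0.2)
water = (0.0, 0.3, 0.5)
near = underwater_color(skin, water, coeffs, 0.0)
mid = underwater_color(skin, water, coeffs, 10.0)
far = underwater_color(skin, water, coeffs, 1000.0)
```

With these made-up numbers, the red channel collapses within a few metres (the hue shift), and at long range every channel converges on the water colour (the veiling light).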

The other big challenge was the proper pronunciation of underwater animals like anemones. I got taught by Jim every time how to properly say it and could still never get it right… Anemone – /əˈneməni/.

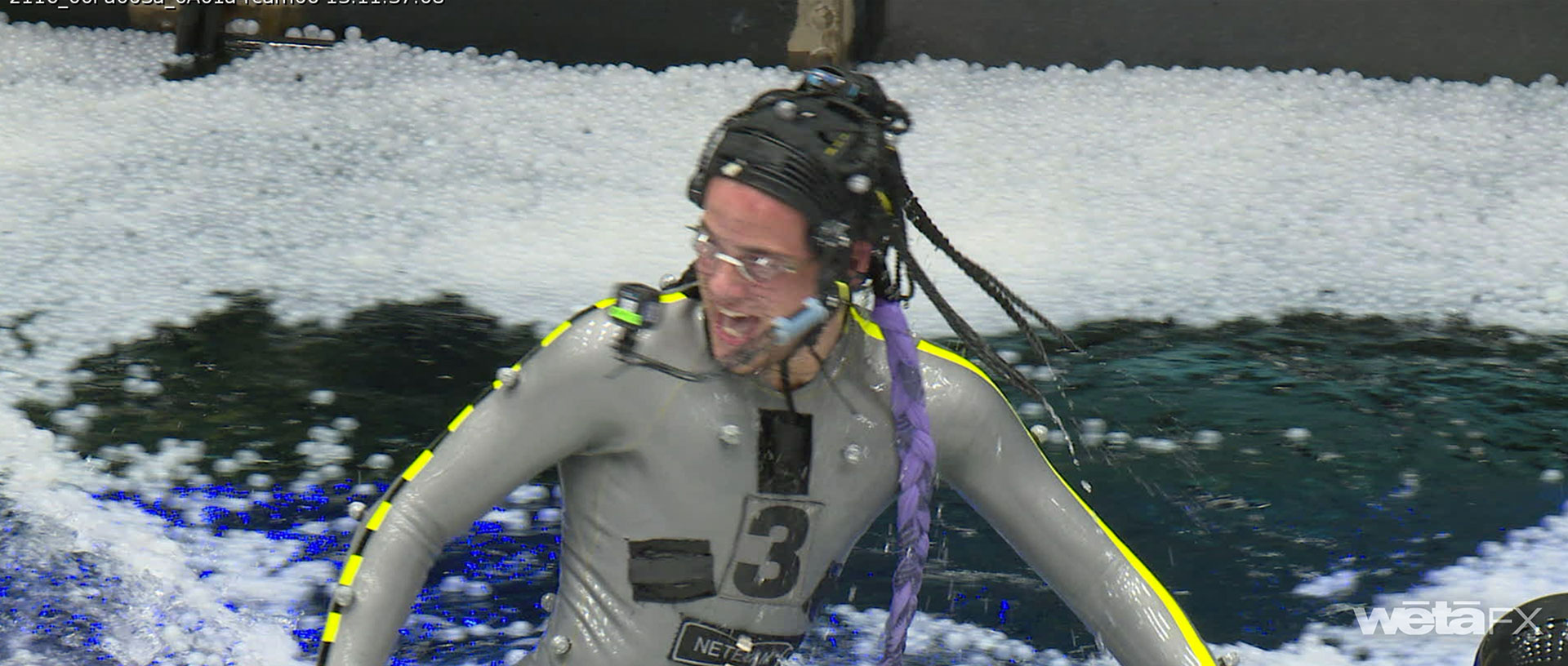

Can you tell us more about the filming process and the underwater mocap suits?

The underwater performance capture was one of the big challenges of the new film. Ryan Champney at LEI was put in charge of figuring out the best way to capture both above and below water at the same time. Two volumes were created – the above water volume was a standard setup, and the below water volume used a blue light spectrum for capture, as the red falls off so quickly under the surface. The second volume was dropped into the tank and lowered into place, then the two were synced. White ping pong balls were then used to cover the surface and stop reflections, from below and above, so the cameras were not confused by bouncing light.

We discover new creatures and fauna. Can you tell us more about them?

The Way of Water is pretty much all new creatures and fauna. We had a few plants and glimpses of animals from the first Avatar, but once the Sully family leaves High Camp, we enter a whole new Pandora. We worked closely with art directors Ben Procter and Dylan Cole on the designs of every plant, above and below the surface, and every creature.

Can you elaborate about the design and creation of the Tulkun?

The Tulkun designs were based on a few creatures. A whale is the obvious influence, although the Tulkun are four times the size of a blue whale. When swimming at depth they swim like a whale or a dolphin, with an up-and-down motion of the tail. When swimming at the surface they can swim more like a sea otter, with more of a side-to-side motion using the split back fin. We also needed to include simple things like a hint of a brow and flexible flesh around the eyes to allow the animators to get emotion in the face.

Can you tell us more about the creature animation?

The creature animation was all blocked out by a team at LEI and led by one of our Animation Supervisors in LA, Eric Reynolds. Once the basic motion and intention was sorted so Jim could edit the footage, it came over to Dan Barrett and the animation team to add weight and proper anatomical motion to the creatures.

Did you use procedural tools for the fauna and the underwater plants?

Whenever possible, we use procedural tools for model generation, texturing, and even animation. Using things like AI has helped us to better understand what multiple versions of plants might look like, including how they would grow in different situations. We were also able to use things like water simulations to get proper movement from tidal swells or open ocean waves on underwater plants.

How did you work with the SFX and stunts teams for the interactions between the humans and the Avatars?

We worked closely with SPFX on things like the motion of the boats, so we could get accurate data that enabled our coupled simulations between the boats and the water to work properly. If the motion of a boat in the water was incorrect, it would cause the sims to fail as the boats would ride too low or high in the water, so the buoyancy would look incorrect.

We also worked with a stunt team that wore blue suits on set to help live action actors understand the spacing of Na’vi in a scene. New to our on-set toolset was the eyeline system, which gave actors proper eyeline and timing when interacting with CG characters.

The eyeline system is second only to actually having the Na’vi there with us on set. It’s a four pick-point cable cam system that is normally used to fly a camera during sporting events. Instead, we mounted a small HD monitor and a speaker – the monitor played footage from the mocap performer’s head cam and their audio came from the speaker. The motion of the monitor was created by constraining it to the CG character’s skeleton, with the data synced and genlocked. This meant the live action talent could walk around and interact with a spatially accurate “fellow actor”.

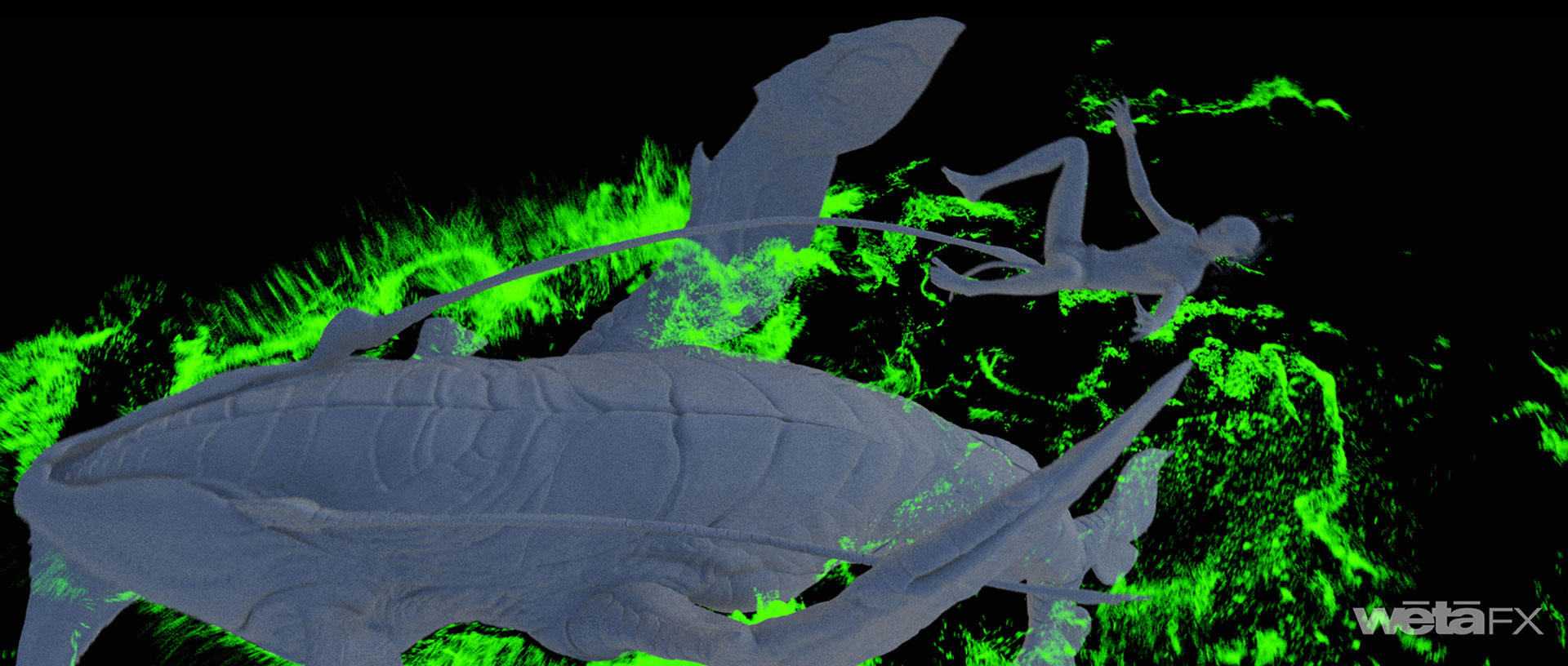

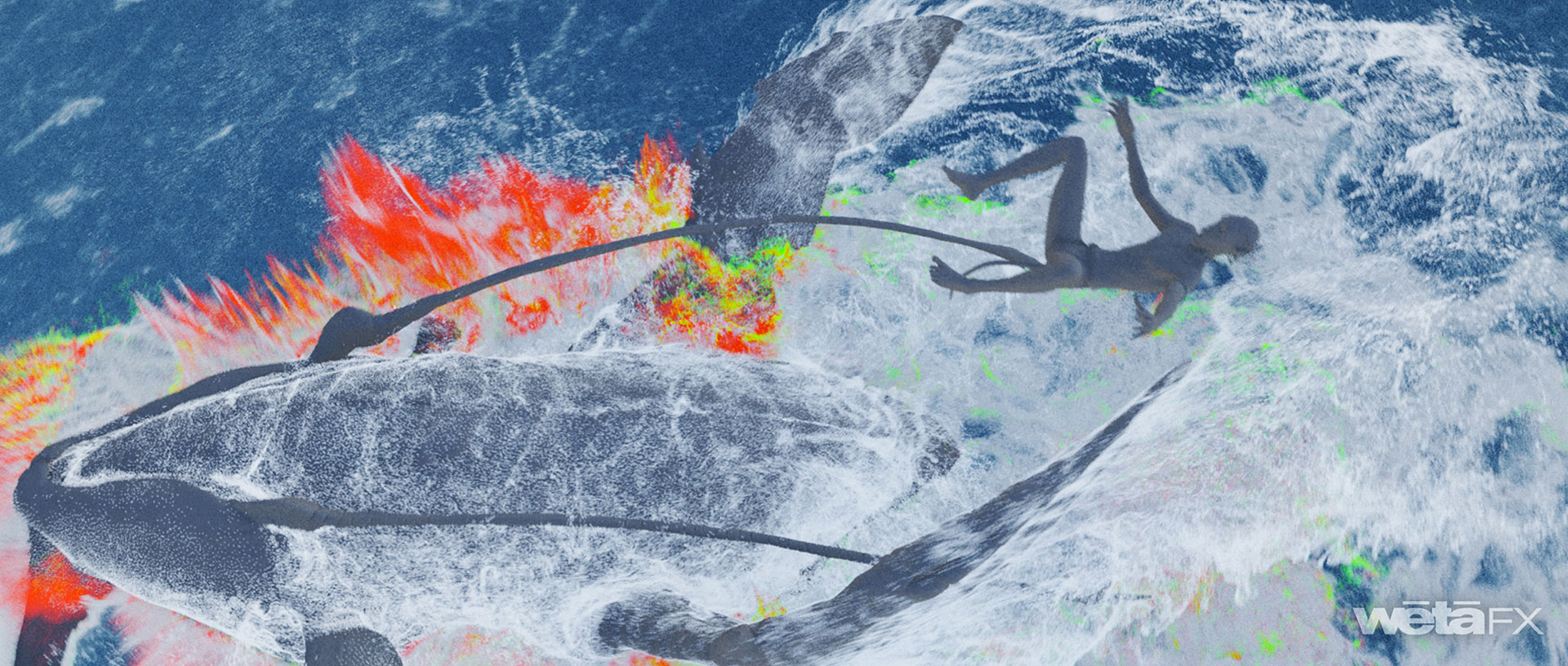

Can you elaborate about the impressive water work?

For this project, our FX team needed to expand our traditional simulation and workflow solutions to be not only reliable and repeatable for scale, but tightly integrated with one another to match the water-based performance capture and live action. Creating over 1,600 unique water simulations required a new and flexible way of achieving the director’s vision. As a basis we used our real-time GPU-based ocean spectra to give the virtual production a physically accurate starting point to capture swell timing, water levels, and basic character interaction. A custom toolkit, Pahi, was created to convert the ocean spectra into water deformations in Houdini and then into our in-house simulation framework Loki. Secondary workflows such as bubbles, foam, and spray transitions were added for integration with vehicles, creatures, and live action elements.
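For readers unfamiliar with the idea, an ocean spectrum describes the surface as a sum of many wave components, each with its own amplitude and wavelength. The sketch below is a deliberately tiny, one-dimensional illustration using the standard deep-water dispersion relation (ω² = g·k); it is not Pahi, Loki, or Weta's GPU implementation, and the function name and the wave components are made-up assumptions.

```python
import math
from typing import Iterable, Tuple

G = 9.81  # gravitational acceleration in m/s^2

# (amplitude in metres, wavelength in metres, phase in radians)
Component = Tuple[float, float, float]


def wave_height(x: float, t: float, components: Iterable[Component]) -> float:
    """Surface height at position x (m) and time t (s) as a sum of cosine waves."""
    height = 0.0
    for amplitude, wavelength, phase in components:
        k = 2.0 * math.pi / wavelength  # wavenumber
        omega = math.sqrt(G * k)        # deep-water dispersion relation
        height += amplitude * math.cos(k * x - omega * t + phase)
    return height


# Hypothetical swell: one long wave plus two shorter ripples.
swell = [(1.0, 60.0, 0.0), (0.3, 15.0, 1.0), (0.1, 4.0, 2.5)]
sample = wave_height(12.0, 3.5, swell)
```

Because longer waves travel faster under this relation, evaluating the same components at different times gives consistent swell timing, which is the kind of property a virtual production stage could rely on.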

How does the HFR process affect your work?

We set up our pipeline at 48 fps and did all our rendering on even frames. If Jim decided he wanted a shot at 48 fps, we would simply render the odd frames as well.
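The frame-selection idea is simple enough to sketch: on a 48 fps timeline, rendering only the even frames yields a 24 fps shot, and filling in the odd frames upgrades it to full HFR. The helper below is a hypothetical illustration; the function name and the frame numbering are assumptions, not Weta's pipeline.

```python
from typing import List


def frames_to_render(first: int, last: int, hfr: bool = False) -> List[int]:
    """Frame numbers to render from an inclusive range on a 48 fps timeline."""
    if hfr:
        # Full 48 fps: every frame, even and odd.
        return list(range(first, last + 1))
    # 24 fps delivery: even frames only, starting at the first even frame.
    start = first if first % 2 == 0 else first + 1
    return list(range(start, last + 1, 2))


standard = frames_to_render(1001, 1008)
high_frame_rate = frames_to_render(1001, 1008, hfr=True)
```

Switching a shot to HFR then only costs the missing odd frames, since the even ones already exist.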

How did you work with ILM?

We shared model assets and textures with ILM. We also had several shots that were split between us, where ILM did the comps after we delivered renders of CG characters.

What is your favourite shot or sequence?

“The Tulkun Hunt” was one of the most challenging sequences, kept me up at night, and was one of my favourites in the film. The sequence was a challenge because it married practical motion data and simulated wave patterns with the CG water to ensure character movement on vessels in the open water was realistic. To match the director’s vision for marine accuracy, the on-set team built full-size boats and control rigs that were faster, more accurate, and more complex machines that could safely move heavy physical objects around on set in a way that could be tightly integrated with the digital environments. Using Overdrive to control the 10-ton hydraulics with 6 degrees of freedom, we were able to get very authentic boat motion data. Once we had that, we were able to run water simulations for the boats and the Tulkun in one simulation to achieve realism with the water.

What is your best memory on this show?

One of my favourite memories on the show was about halfway through the live action shoot. We were shooting some Spider plates on the day and at Weta we were in progress on a few early turnover sequences. One of the sequences was the live action plate extensions and Quaritch getting on the boats. We got first versions to show Jim so I plugged my laptop into the HDR monitor in the DI tent and called Jim over for a viewing. He was floored and ended up getting all the actors and a huge portion of the crew to come over and see the footage. Unfortunately it derailed filming for a couple of hours, but it was exciting to get everyone amped up.

How long have you worked on this show?

I went straight onto this show at the end of 2018 after finishing Alita: Battle Angel.

What was the size of your team?

The overall team size for this project was around 1,700 people at Weta FX.

What is your next project?

We only have 2 years to finish the next Avatar so we need to get going.

A big thanks for your time.

WANT TO KNOW MORE?

Weta FX: Dedicated page about Avatar: The Way of Water on Weta FX website.

Disney+: You can watch Avatar: The Way of Water on Disney+ (coming soon).

© Vincent Frei – The Art of VFX – 2023
