Dan Lemmon began his career on TERMINATOR 2 3-D at Digital Domain, where he also worked on TITANIC, FIGHT CLUB and THE LORD OF THE RINGS: THE FELLOWSHIP OF THE RING. He then worked on the two other THE LORD OF THE RINGS films at Weta Digital. In 2007, he was VFX supervisor on 30 DAYS OF NIGHT, followed by ENCHANTED, JUMPER and AVATAR.

Can you tell us about your background before your first visual effects film?

I grew up in the 80s just as personal computers were becoming common in American households. I was fascinated with the idea of using them to make pictures, and I learned to write simple graphics programs. I was also really into movies, especially STAR WARS. My four brothers and our neighborhood friends would use our parents’ camcorders to make our own versions of STAR WARS and other popular films of the era. I had a vague idea that I wanted to work in movies when I grew up, but it was when the digital effects industry took off in the early 90s that I discovered my path into the business. I studied Industrial Design in university, which shared some skills and software with the visual effects industry, and I started interning at Digital Domain during my summer breaks. I worked on TERMINATOR 2 3-D, THE FIFTH ELEMENT, and TITANIC as part of my internships before I graduated and went to work full time.

How was your collaboration with director Rupert Wyatt?

It was a pleasure collaborating with Rupert and the rest of the filmmaking crew. Everybody was very accommodating and wanted to make sure that we were getting what we needed from the shoot in order to make our apes look as good as possible and still deliver the film on time. We were pushing our performance capture technology in directions we hadn’t taken before, so there was a lot of experimentation and learning that had to happen on everybody’s part. We had to figure out how to get everything working on a live-action set, and that included figuring out how we were all going to work together. We needed to get good, usable data out of the performance capture setup but didn’t want to slow down the production process.

This is his first film with so many visual effects. What was his approach to them?

I would describe his approach as story-centered. He was most concerned about working with Andy and the other actors to get great performances that propelled the story forward. He had a solid understanding of the tools we were using to capture those performances, but he wasn’t preoccupied with the technical particulars. He made sure we understood what he wanted from the scene and trusted us to come through for him. Rupert put his attention where it really needed to be, on the actors and the story, and that carried forward from shoot to post. As we started working on shots, his main concern was that we carried all of Andy’s performance across to Caesar.

Can you explain how the scenes involving Caesar and the other apes were shot?

We started by shooting the scenes with all of the actors – the ones that play apes and the ones that play humans – acting in the scene together. Once the director was happy with that, we would pull everybody out and shoot a clean plate to help us with painting out the ape performers later. Sometimes we would leave the human performers in the clean plate depending on the nature of the shot, but the performance of the actors was always better when everybody was playing the scene together, so that was usually the take that would end up in the movie. When we started our VFX work, we would paint out the ape performer and replace them with the digital character.

Did you reuse the tools created for KING KONG, and did you develop new ones?

We took a lot of what we learned from Kong and added to it. Our fur system was completely rewritten between Kong and Apes. Our skin and hair shading models have advanced considerably since then, and our facial performance capture technology has made strides as well. Our muscle and tissue dynamics have been rewritten, and we used facial tissue simulations for the first time.

The fur on your apes is amazing. Can you explain how you created it?

Thank you! Our fur and hair grooming system is called Barbershop, and it plugs into Maya and RenderMan. It was written over the last several years by Alasdair Coull and a team of five other programmers, with input from Marco Revelant and our Modeling department. Barbershop is a “brush”-based modeling tool that allows artists to manipulate a pelt of fur while visualizing and sculpting every strand of hair that will make it to the render. We also simulated wind and inertial dynamics, especially for the big orangutan Maurice, who had matted, dreadlock-like clumps of fur on his arms and legs.

Did you film real apes on set for lighting reference?

Real apes were never used on set. We did use a silicone bust that had been punched with yak and goat hair as a lighting reference, but we found that the dark-haired humans in the plates were actually better reference. We also used the standard VFX chrome and grey balls for lighting reference.

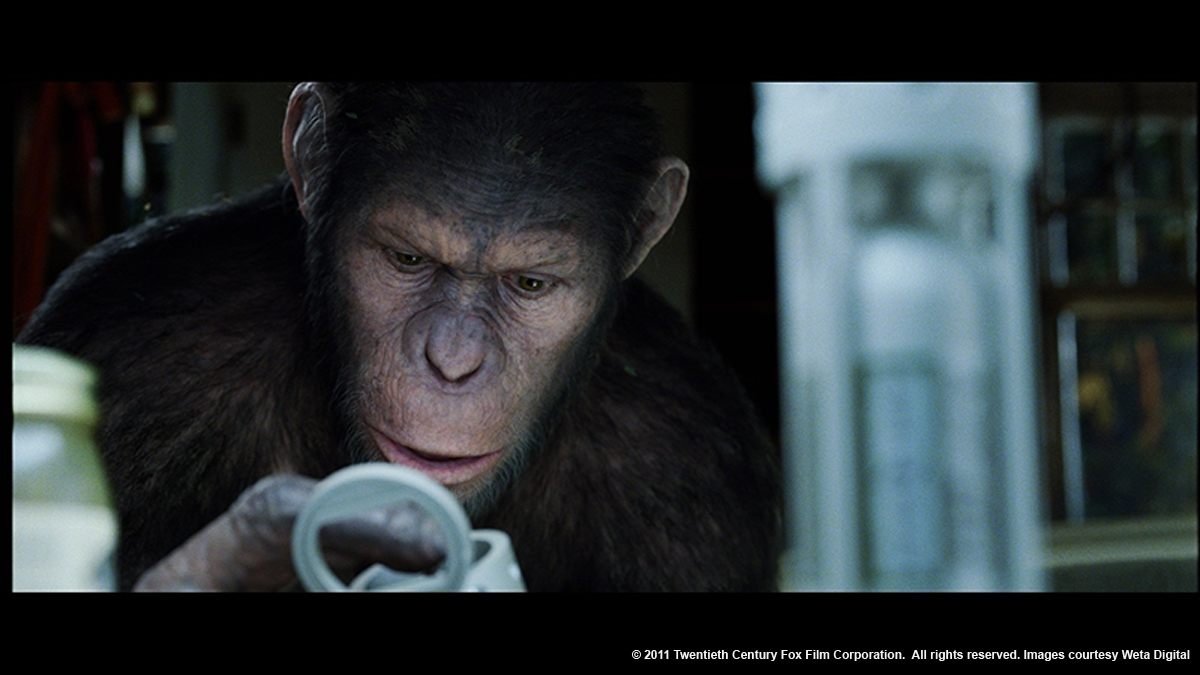

Can you explain step by step the creation of a Caesar close-up shot?

The first steps are the photography and performance capture. Andy Serkis plays through the scene with the rest of the actors, and the motion picture camera photographs them while at the same time the performance capture system records the movements of Andy’s body and face. After the director is happy with the performance, we shoot a clean background. The director and the editor go away and cut the scene together, and then they come back to us with the shots they want us to add Caesar to. We scan the film and process the performance capture data, retargeting Andy’s movements onto a chimpanzee skeleton. Our motion editors and animators work with the motion and facial data, cleaning up any glitches and making sure that the motion that is coming through on Caesar matches Andy’s performance. Enhancements are done as needed, and once the motion is solid, we run a muscle and tissue dynamics pass that simulates the interaction of Caesar’s bones, muscles, fat, and skin. We apply a material “palette” to the result, which contains all of the color, wrinkles, skin pores, fingerprints and other fine skin details. We apply a pelt of digital fur at the same time. We then place digital lights, taking care to match the live-action photography and also to bring out Caesar’s shape and features. We then send this package of data to our bank of computers, which renders the Caesar elements. Compositors take those elements and composite them into the live-action plate. Because Andy and Caesar are different shapes, there are always bits of Andy that stick out beyond Caesar’s silhouette and need to be painted out, so our Paint and Roto department uses the clean plate to remove any extra bits of Andy that are visible after we drop Caesar into the shot.

How did you create the impressive shot that follows Caesar crossing the whole house from the kitchen to his room in the attic? Is it full CG?

That shot began with previsualization that roughly charted the path of Caesar as he moves through the house. Unfortunately the house did not exist as a complete set – the first two levels of the house were built on one stage, and the attic was on another. Also, the constraints of the set and the camera package didn’t allow for the plate to be shot in a single piece. We ended up shooting the plate in four different pieces and stitching them back together. There was no motion control, so Gareth Dinneen, the shot’s compositor, had to do a lot of reprojections and clever blending to bridge from one plate to the next. We also used CG elements through some of the trickier transitions. Once we had a roughly assembled plate, we blocked out Caesar’s animation. We did a rough capture with Andy on a mocap stage for facial reference, but because most of the action was physically impossible for a human to do, the shot was entirely keyframed.

The apes’ eyes are very convincing. How did you achieve this result?

We had the assistance of an eye doctor and we used some of his equipment to study a number of different human eyes at a very fine level of detail. We used what we learned to add anatomical detail to our eye model, and we also put a lot of care and attention into the surrounding musculature of the eyelids and the orbital cavity to try to improve the realism of the way our eyes moved. We also studied a lot of photo and video reference of ape eyes. We did cheat in a few places – the sclera, or “whites,” of most of our apes’ eyes are whiter and more human-like than they would be on real apes. We cheated them whiter – as we did on KING KONG – in order to make it clearer which way the apes were looking, which in turn makes it easier for the audience to read their facial expressions. We justified our cheat by attributing the whitening to a side effect of the drug that gives the apes intelligence and makes their irises green. That is why the apes at the very beginning of the film have darker eyes that are more consistent with real apes – they haven’t received the drug yet.

Can you explain the creation of the great shot of Caesar climbing to the top of the trees through various conditions and seasons?

That was a completely digital shot. We created the redwood forest using partial casts and scans of real redwoods as a starting point. We wanted to make each seasonal section of the shot distinct, so we did a number of lighting and concept studies to try to change not just the weather and season of each section, but also its visual tone. Caesar goes through four different age transitions in that shot, as well. We used two distinct models and four different scales and wardrobe changes to help communicate that change.

What was the real size of the Golden Gate set? How did you recreate this huge environment?

The set was about 300 feet long by 60 feet wide, and it was surrounded on three sides by a 20-foot-tall greenscreen wall. The construction department built the hand rails and sidewalk that run down both sides of the bridge, but all of the cables, stanchions, and beam work were created digitally. The surrounding Marin Headlands, Presidio, harbor, and City of San Francisco were digital as well.

Can you tell us about the creation of the shot showing the ape jumping onto a helicopter?

Each of those helicopter shots was its own puzzle. Some of the shots featured a real helicopter hovering at the Golden Gate Bridge set. Other shots were completely digital. A few of the shots used a 50/50 mix where the pilot and passengers of the helicopter along with the seats and dash of the helicopter were real, but the exterior shell of the chopper and the surrounding environment were digital.

Some shots involved a huge number of apes. How did you manage so many creatures, both on the animation side and on the technical side with your render farm?

We used a combination of our Massive crowd system and brute force. For distant shots with fairly generic running and jumping action we were able to use Massive to generate the motion and render highly-optimized reduced geometry. Medium shots that required more specific actions were usually a combination of keyframe animation and Massive, and we had fewer opportunities to reduce geometric complexity.

What was the biggest challenge on this film and how did you overcome it?

The biggest challenge was making a digital chimpanzee that could engage an audience and hold their interest, even through the mostly-silent middle of the film. We saw an early cut of the film that featured Andy before we’d had a chance to replace him with Caesar. What was surprising is that after a few minutes you forgot that you were watching a man in a mocap suit. You accepted Andy as Caesar in spite of his funny costume because his performance was so good. He was totally engaging and the movie worked really well. So we knew if we could just faithfully transfer Andy’s performance onto Caesar, Caesar could carry the film. That was a big challenge, though, because Andy’s and Caesar’s bodies and especially their faces are so different from one another. It took a lot of iterating and careful study to make Caesar look like he was doing the same thing as Andy. The scene where Caesar is up against the window in the Primate Facility, and Will and Caroline are on the other side of the glass – that was a particularly challenging scene for us in terms of matching Andy’s performance. His face was going through a number of complicated combinations of facial expressions, and he kept pressing the flesh of his face up against the glass. We knew it was going to be hard, but it was also a great proving ground where we could really push the limits of our facial rig.

Was there a shot or a sequence that kept you from sleeping?

The schedule was so tight there literally wasn’t time for sleeping! The Golden Gate Bridge sequence, in particular, was difficult because of the sheer number of apes that had to be animated and rendered. Because it was a big action sequence and the apes were doing very athletic things, there was a limit to what our human performers could do. In the busy sections most of the apes had to be keyframed, and that can take a long time to get right.

How long did you work on this film?

I spent about a year and a half on the movie.

What was the size of your team?

There were roughly 600 people who worked in some way on the visual effects for this film.

What are the four films that gave you a passion for cinema?

The films that really made me want to get into visual effects were the original STAR WARS trilogy, BLADE RUNNER, and later TERMINATOR 2 and JURASSIC PARK. JURASSIC PARK, in particular, was a big influence because it redefined what was possible in terms of bringing creatures to life on the screen. I was 17 at the time, and I remember sitting in the theater with my jaw on the floor, thinking to myself, “THAT is what I want to do with my life.”

A big thanks for your time.

© Vincent Frei – The Art of VFX – 2011
