In 2020, Robert Winter explained the work of Framestore on Lady and the Tramp. He then worked on Army of the Dead.

How did you and Framestore get involved on this show?

VFX Supervisor Pete Travers, VFX Producer Tricia Mulgrew and Producer Carsten Lorenz visited Framestore’s Montreal studio in early 2019. They were looking to have us work on the “space” sequences and the Nano Swarm. I found it a bit ironic that we would be creating VFX for a Roland Emmerich film without delivering a single shot of Earth destruction. However, the Nano Swarm sounded very intriguing and we would get a fair share of destruction shots, only we would be destroying the surface of the Moon instead of Earth. After that first visit the pandemic started and delayed our participation on the project for another 16 months.

How was the collaboration with Director Roland Emmerich and Production VFX Supervisor Peter G. Travers?

They were great collaborators. They had a very clear vision for each shot and they looked to us to present creative solutions that could make the shots even better.

What were their expectations and approach about the visual effects?

By the time we came on board the project, Roland and Pete had established the fundamental concepts for Nano Swarm and space scenes through previs. They asked us to focus on establishing the scope and scale of the scenes as that is difficult to convey in the previs process. We selected some key shots for each scene to drive our development and methodologies and dove in head first.

How did you organize the work with your VFX Producer?

The average complexity of our shots was very high, so we knew the life-cycle of each shot would be long. That presented a challenge, as these complex shots typically use the most resources. Our Producer, Sophie Carroll, was excellent at adapting our crew and schedule to maximise the amount of time we had to refine our full slate of complex shots.

How was the work split between the Framestore offices?

All of Framestore’s work was done in Montreal.

Which sequences were made by Framestore?

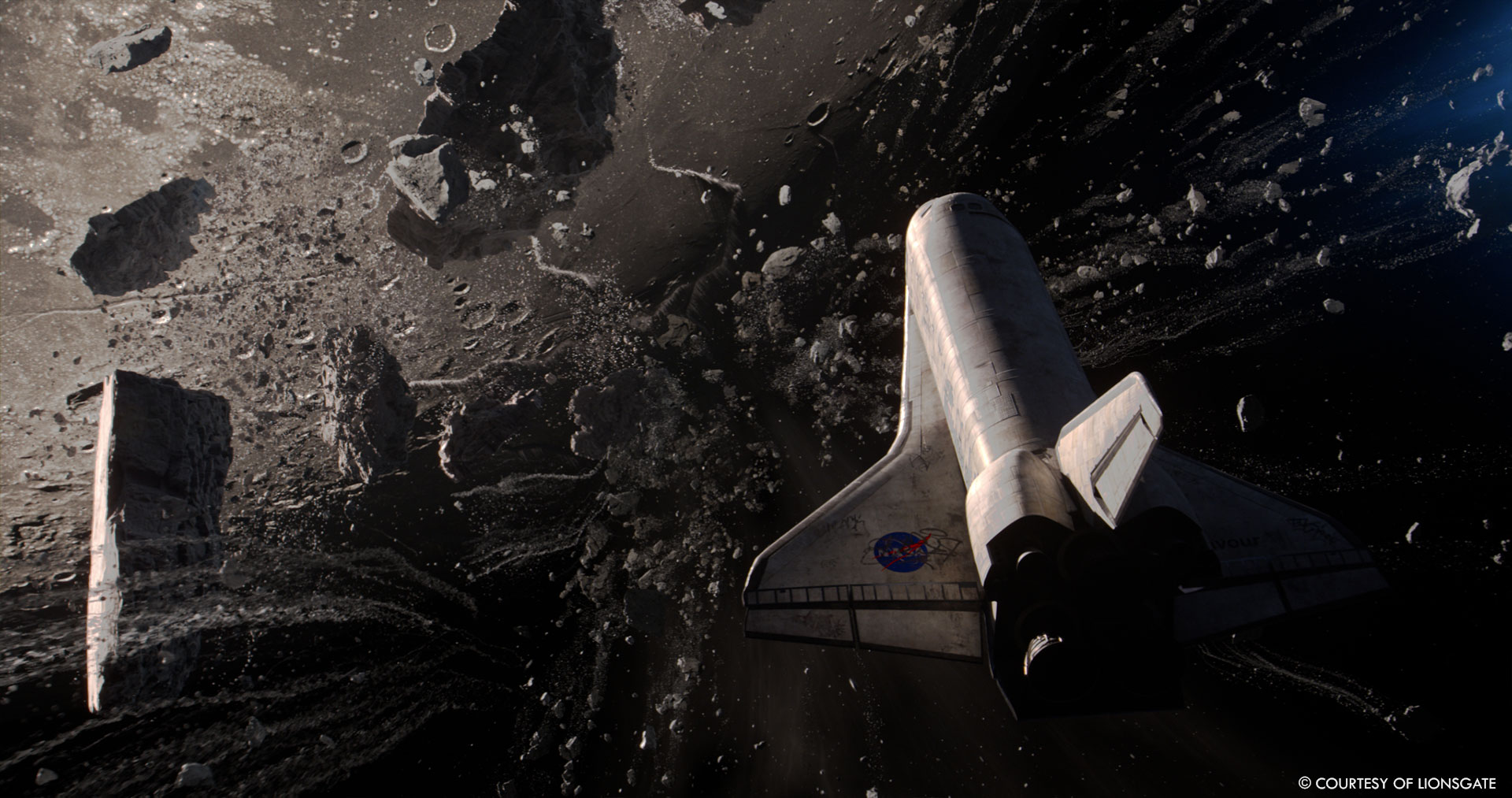

The shuttle mission sequence that opens the film; the reconnaissance mission that ended badly for the three astronauts in the capsule; the shuttle launch (after they are airborne); the arrival at the hole in the Moon; the rover bait sequence, including the attack of the Nano Swarm; and the descent towards the Moon hole (until they cross the threshold of the Moon surface).

How was the space sequence filmed?

Stunts mostly used parallelograms to float the actors. For the launch and the flight into the Moon, we generated content from the previs scenes to project on LED panels. This provided interactive light that matched the action in the previs. We generated a library of lighting elements that the DP, Robby Baumgartner, could use as lighting cues on the panels. He could layer elements as needed and then adjust the timing on the fly. It was a very flexible process.

Can you elaborate on the creation of the space shuttle?

We tried to build an authentic replica of the Endeavour space shuttle. Our asset team took the challenge to heart. Our texture lead, Marc-Andre Dostie, even used a random ID generator to create textures with unique serial numbers for every heat tile on the shuttle. It was a really clever way of getting that level of complexity with little overhead. We also had a lot of fun creating original graffiti for the variation of the shuttle that was used later in the film.
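As an illustration of the random ID idea mentioned above, here is a minimal sketch in Python. It is not Framestore's tool: the function name `make_tile_serials`, the `V` prefix, the serial length and the seed are all hypothetical choices made for the example. It only shows how a seeded generator can hand out one unique, repeatable serial per tile, which a texture pipeline could then stamp onto each tile.

```python
import random
import string


def make_tile_serials(tile_count, seed=0, length=6):
    """Return one unique pseudo-random serial string per tile.

    Hypothetical format: the letter 'V' followed by `length` characters
    drawn from A-Z and 0-9. A fixed seed makes the result repeatable,
    so the same tile gets the same serial on every texture rebuild.
    """
    rng = random.Random(seed)
    alphabet = string.ascii_uppercase + string.digits
    serials = []
    seen = set()
    while len(serials) < tile_count:
        candidate = "V" + "".join(rng.choice(alphabet) for _ in range(length))
        # Reject the rare duplicate so every tile stays unique.
        if candidate not in seen:
            seen.add(candidate)
            serials.append(candidate)
    return serials


if __name__ == "__main__":
    # Print a handful of example serials for the first few tiles.
    for index, serial in enumerate(make_tile_serials(5, seed=42)):
        print(index, serial)
```

Seeding the generator is the design choice that keeps the overhead low in this sketch: nothing has to be stored per tile, because the list can be regenerated on demand.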

How did you create the digital doubles for the astronauts?

We worked from scans of the actors in the spacewalk suits. The lookdev on the suits was tricky due to the complex surface properties of the suit material. We also used the doubles to add the glass visors to the helmets. The actors could not breathe with the visors down on set, so we added all the visors in post.

How was Framestore's experience on movies like Gravity and The Midnight Sky useful for this show?

It is always great to build on the experience from our previous projects. We try to leverage as much as we can, but our tools are changing so fast that much of the software and many of the techniques used to create Gravity have since been deprecated. The Midnight Sky was still in post production when we started working on Moonfall, so the timing did not work for us to leverage the same crew.

How did you create the Earth and the Moon?

Our Earth surface was generated using satellite images. The atmosphere is rendered with our volume shader, which is built on a physically accurate model. We reliably get photorealistic results when we render Earth on a global scale. We built multiple Moon surfaces depending on the scene. For the scenes that took place near the hole in the Moon, we had to build a highly detailed area with multiple stages of destruction. Our FX team ran these Moon surfaces through destruction simulations, producing a range of elements from surface cracks to Moon chunks the size of a city block.

Can you elaborate about the design and the creation of the Swarm?

The concept for the Nano Swarm was presented to us as an AI created by an alien race that can take any form it requires to achieve its goals. Since it was alien tech, it was not required to follow our current understanding of physics. Whenever I get a brief like that, I know we are in for a challenge.

What kind of references and influences did you receive for the Swarm?

The two natural phenomena that appealed to Roland for the motion of the Nano Swarm were bird murmurations and ferrofluids. Murmurations are flocks of birds moving together. The motion produced by these murmurations is both beautiful and complex. Roland wanted us to capture that in the Nano Swarm. Roland also wanted us to apply some of the characteristics of ferrofluid to the Nano Swarm. Specifically, the idea that a material can move with an organic fluid motion, then quickly transition to an organised structure and shape.
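For readers unfamiliar with murmurations, the flocking behaviour described above is often approximated with a "boids" model, where each agent follows three local rules: cohesion, alignment and separation. The sketch below is that generic textbook model in Python, not the method used on Moonfall; the function name `step_flock` and every parameter value are assumptions chosen for illustration.

```python
import math


def step_flock(positions, velocities, dt=0.1, radius=2.0,
               cohesion=0.05, alignment=0.1, separation=0.2, min_dist=0.5):
    """Advance a 2D flock by one time step using three boids rules.

    positions and velocities are lists of (x, y) tuples of equal length.
    All weights and distances are illustrative values, not tuned settings.
    """
    new_velocities = []
    for i, (px, py) in enumerate(positions):
        vx, vy = velocities[i]
        sum_x = sum_y = 0.0      # neighbour positions, for cohesion
        sum_vx = sum_vy = 0.0    # neighbour velocities, for alignment
        push_x = push_y = 0.0    # repulsion from close neighbours
        neighbours = 0
        for j, (qx, qy) in enumerate(positions):
            if j == i:
                continue
            dx, dy = qx - px, qy - py
            dist = math.hypot(dx, dy)
            if dist >= radius:
                continue  # only nearby agents influence this one
            neighbours += 1
            sum_x += qx
            sum_y += qy
            sum_vx += velocities[j][0]
            sum_vy += velocities[j][1]
            if dist < min_dist:
                push_x -= dx
                push_y -= dy
        if neighbours:
            # Steer toward the local centre, match the local heading,
            # and move away from agents that are too close.
            new_vx = (vx + cohesion * (sum_x / neighbours - px)
                      + alignment * (sum_vx / neighbours - vx)
                      + separation * push_x)
            new_vy = (vy + cohesion * (sum_y / neighbours - py)
                      + alignment * (sum_vy / neighbours - vy)
                      + separation * push_y)
        else:
            new_vx, new_vy = vx, vy
        new_velocities.append((new_vx, new_vy))
    new_positions = [(px + vx * dt, py + vy * dt)
                     for (px, py), (vx, vy) in zip(positions, new_velocities)]
    return new_positions, new_velocities
```

Calling `step_flock` repeatedly on the returned positions and velocities produces the loose, collective motion; the nested loop is quadratic in the number of agents, so this form is only suitable for small demonstrations.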

How did you manage to animate so many elements?

Our process for animating the Swarm was a layered approach. Our animators used a custom rig to define the overall timing, speed and shape of the Swarm. That was handed to our FX team, who used that to simulate the individual nano components of the Swarm.

What was the main challenge with the Swarm?

Because the Nano Swarm was ultimately a simulation of millions of small components, the division of responsibilities between the Animators and the FX artists was blurry. When working on a more traditional creature, our Animators can refine a performance until the animation is approved and then pass it to the downstream departments. Early in our testing, we realised that the Animators would have a difficult time reviewing their work without first running a simulation of the Nano Swarm particles. Our FX Lead for the Nano Swarm, Eli Titas, and our FX Supervisor, Kevin Browne, put together a slick process that enabled the Animators to preview their animation with the simulation applied to the Nano Swarm. That development was key to delivering our Nano Swarm shots.

How does its dark aspect affect your lighting work?

A very astute question. How do you see a creature made of black material in the blackness of space? This was the second biggest challenge with the Nano Swarm. We first tried to solve it in the composition of each shot. If we could compose the shot so Earth was behind the Nano Swarm we knew it would work because we could see a strong silhouette of the creature. However, there were still shots that needed a lighting solution. One lighting technique we employed to get some variation and lighting complexity into the creature was to simply make it more reflective. That allowed us to get more creative with the lighting. For example, we would make the Earth or Moon bigger in the scene. That would create more reflections on the creature which would produce a similar result as adding a bounce light.

After the impact, there are a lot of destruction elements. How did you manage these FX elements?

Since all our work at Framestore was in space, we did not have any shots of Earth being destroyed, but we did get to destroy the Moon surface. Our FX Supervisor, Kevin Browne, along with our FX Leads, Tangi Vaillant and Irnes Cavkic, established our workflow for all of the elements relating to the Moon destruction. We had a large number of wide shots with hundreds of millions of Moon chunks floating in space. We were really trying to sell the scale of these shots by adding more details. We were constantly hitting the ceiling in terms of how many objects we could fit in a shot. However, the FX team always found a way to make it work.

Which shot or sequence was the most challenging?

The scene that reveals the Moon’s origin. It was our most complex scene and it had the shortest delivery schedule. The scope of the scene was huge. It was a visual exposition explaining the hollow moon theory. The entire scene covered millions of years. It was very ambitious.

Was there something specific that gave you some really short nights?

The timelapse shot of Earth’s creation that played for nearly a minute of screen time. There are always resource challenges that can derail a shot like that.

What is your favorite shot or sequence?

I like the scene of the Endeavour arriving at the Moon hole. Seeing the Moon surface getting torn apart from the effects of Earth’s gravity is a unique visual experience.

What is your best memory on this show?

We delivered all of our shots working remotely as a team for Moonfall. During the project, we got together in person for a picnic at a park in Montreal. It was so great to share some laughs in person. It is the little things these days.

How long did you work on this show?

12 months.

What’s the VFX shot count?

We delivered 180 shots from Framestore.

What was the size of your team?

We had around 100 crew members.

What is your next project?

TBD.

A big thanks for your time.

WANT TO KNOW MORE?

Framestore: Dedicated page about Moonfall on Framestore website.

© Vincent Frei – The Art of VFX – 2022